SCOTUS Declines Facebook Case, Thomas Calls for Review of Section 230

WASHINGTON — The United States Supreme Court has declined to hear an appeal from a Texas woman who claims that Facebook and its parent company, Meta, are liable for facilitating child sex trafficking. The court denied the petition for a writ of certiorari (a request for review by a higher court) in Jane Doe v. Facebook Inc., meaning that the high court will not review the case’s questions about Facebook’s liability under Section 230.

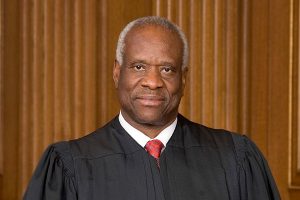

Jane Doe’s counsel filed the petition with the high court in September of 2021. The petition was denied, with the conservative-leaning Justice Clarence Thomas calling for the scope of Section 230 of the Communications Decency Act of 1996 to be revisited, whether by Congress or by the court.

“Here, the Texas Supreme Court recognized that ‘[t]he United States Supreme Court—or better yet, Congress—may soon resolve the burgeoning debate about whether the federal courts have thus far correctly interpreted Section 230,’” Justice Thomas wrote. “Assuming Congress does not step in to clarify…230’s scope, we should do so in an appropriate case.”

This is a noteworthy development for the adult entertainment industry and the broader online sector. Section 230 is often referred to as the “First Amendment of the Internet” or “the twenty-six words that created the internet.” Crucially, Section 230 states that “no provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

Courts across the US judicial system have repeatedly affirmed that Section 230 provides a broad liability shield that protects interactive computer services, such as an adult website or a social network like Twitter, from liability for the conduct of third-party users who exploit those venues for illegal purposes, including trafficking.

Jane Doe v. Facebook Inc. involves a third-party user who took advantage of Facebook to recruit a minor into sexual exploitation. There is no doubt that Doe was wronged and that the perpetrator of those crimes should be punished to the fullest extent of the law. However, holding a platform vicariously liable for the actions of its users would set a damaging precedent, as it would discourage platforms from allowing user-generated content (UGC) or force them to closely scrutinize every upload to, and communication across, the platform.

For large, popular social media platforms, and for adult sites that receive a substantial number of UGC uploads, a duty to review every upload and communication in detail before it goes live would be so burdensome that they could not function as they currently do. Twitter, for example, was clocking approximately 6,000 tweets per second as of May 2020, which translates to roughly 500 million tweets per day. Prescreening such an enormous number of daily communications would be challenging, to put it mildly.

Clarence Thomas official portrait by Steve Petteway, from the Collection of the Supreme Court of the United States