Instagram To Demote Compliant Content It Determines To Be ‘Inappropriate’

We in porn are well aware of the world’s uneven policy creation and enforcement when it comes to industry-related and industry-adjacent businesses and humans.

Social media, especially Instagram, has emerged as one of the most troubling spaces — a place where a naked “influencer” is lauded and a humorous manatee (read: performer Missy Martinez) is shut down without recourse. Yesterday, Instagram upped the ante by announcing policies that will inevitably further downgrade the app’s sex worker inclusivity and usefulness.

Per TechCrunch, Instagram will now demote content it determines to be “inappropriate.” The app has “begun reducing the spread of posts that are inappropriate but do not go against Instagram’s Community Guidelines.” In other words, if a post is sexually suggestive (Who decides what counts as “sexually suggestive”?) but doesn’t depict a sex act or nudity, it can get demoted.

Instagram continued, “This type of content may not appear for the broader community in Explore or hashtag pages.”

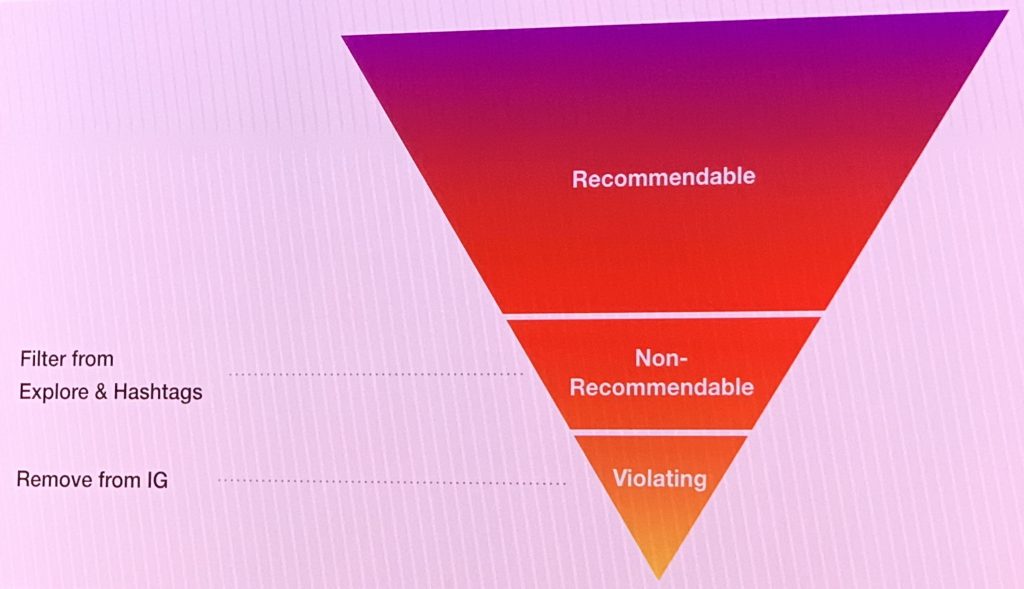

The graphic above showcases the new in-between category for content: not in outright violation, but not Insta-loved either.

Demoted content will be seen by fewer people, which will obviously impact creators’ capacity to gain new followers. And mark my words – it’s not yoga pants models that are going to have to worry about this. “Known” members of the adult community trying to connect with fans within the confines of Insta-policy, though, likely will.

TechCrunch also suggested that if content, like a meme, doesn’t constitute hate speech or harassment, but is considered to be in bad taste (Who decides what counts as “bad taste”?), lewd, violent or hurtful, it could also get demoted.

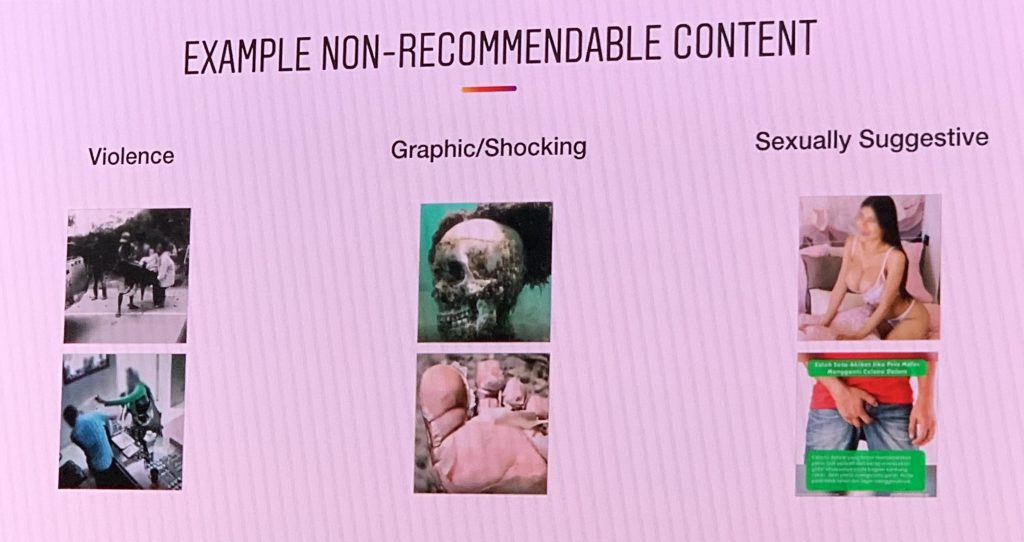

The graphic above showcases more detailed examples of “non-recommendable” in-between content.

“We’ve started to use machine learning to determine if the actual media posted is eligible to be recommended to our community,” said Will Ruben, Instagram’s product lead for Discovery, according to TechCrunch.

To that end, Instagram is training content moderators to label “borderline content” while they look for outright policy violations. The company will then use those labels to train an algorithm to identify demote-able content. These posts won’t be fully removed from the feed, just hidden or obscured in a way that sounds very much like shadow banning.

TechCrunch also reported that, for now, the new policy will not impact Instagram’s feed or Stories bar. This means existing followers will still see what someone posts, but people searching or browsing will not.

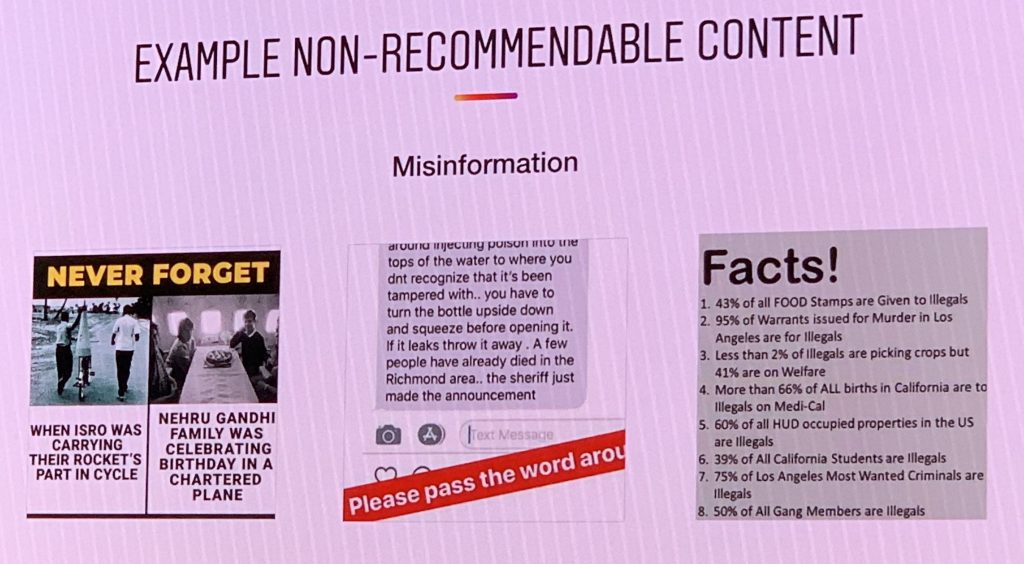

The graphic above showcases further examples of “non-recommendable” in-between content.

Mashable tech reporter Karissa Bell was at Facebook’s headquarters on April 10 when this new policy, along with a bunch of Facebook stuff, was announced. Bell also sees the shadow banning similarities.

Bell wrote in a tweet that “Content that’s deemed ‘low quality’ is also ‘non-recommnenable’ and will not appear in explore or hashtag pages – note this sounds very similar to supposed ‘shadow bans’ IG creators have been talking about for a long time.” (sic)

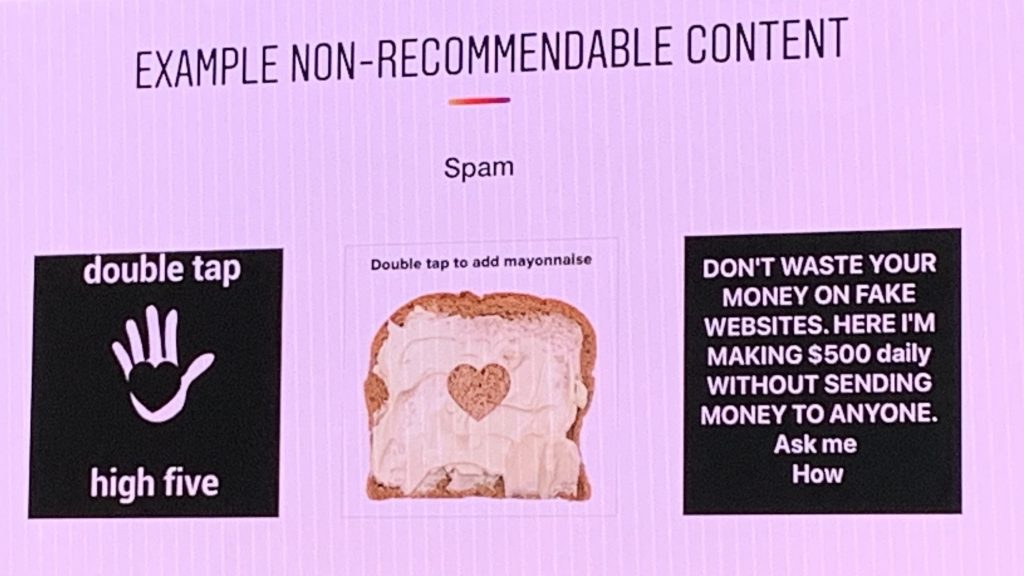

The graphic above showcases further examples of “non-recommendable” in-between content.

All this leaves me wondering, yet again: Why do we in the community bother with Instagram at all?

Like its parent, Facebook, Instagram does not like the adult industry community, nor does it pretend to, though it must like all the traffic and content that community members bring to its spaces. Users who are also community members, especially performers and models, are stuck in a rock/hard place situation wherein Insta is a necessary evil.

But, again, I ask – is it?

I have given up on Instagram https://t.co/QPC3nlJ7RI

— Amberly Rothfield (@AmberlyPSO) April 11, 2019

Images via TechCrunch.