Chinese Programmer Claims He’s Built Facial Recognition for Porn

A user of Weibo, a Chinese social network, claims to have built a database that combs porn websites with facial recognition software, thus enabling users to identify whether any woman on social media has appeared in porn.

Yiqin Fu, a PhD candidate in political science at Stanford, translated the Weibo posts into English and shared them on Twitter, saying that the original poster claims to have “successfully identified more than 100,000 young ladies from around the world, cross-referencing faces in porn videos with social media profile pictures. The goal is to help others check whether their girlfriends ever acted in those films.”

The original posts came from a Weibo user who claims to be based in Germany and who says everything he’s done so far is legal, “because 1) he hasn’t shared any data, 2) he hasn’t opened up the database to outside queries, and 3) sex work is legal in Germany, where he’s based.”

Reactions on Twitter have been dubious—not only as to whether this database in fact exists (as no proof has been provided), but also as to whether its supposed users have girlfriends to check up on in the first place. When Vice’s Motherboard contacted the user for verification, they reported, “He said they will release ‘database schema’ and ‘technical details’ next week, and did not comment further.”

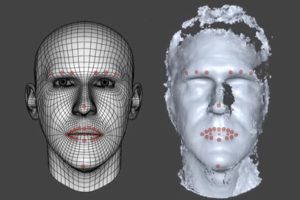

Whether or not the Weibo user is telling the truth about his accomplishment, his claim is sparking controversy. As Motherboard’s Samantha Cole pointed out, “The ability to create a database of faces like this, and deploy facial recognition to target and expose women within it, has been within consumer-level technological reach for some time.” So even if the original poster has not actually built such a database, the idea is out there, and it’s likely that someone will put it to use.

Soraya Chemaly, author of Rage Becomes Her, tweeted on Tuesday, “This is horrendous and a pitch-perfect example of how these systems, globally, enable male dominance. Surveillance, impersonation, extortion, misinformation all happen to women first and then move to the public sphere, where, once men are affected, it starts to get attention.”

Other Twitter users are also outraged by the misogyny inherent in this use of machine learning. User @hacks4pancakes wrote, “This is going to get people blackmailed, assaulted, and killed. Good going, chuckleheads.” And user @banhbaodown tweeted, “As a programmer, I believe we’re supposed to help keep people and their identities safe, not expose them. This puts so many women in danger.”