Biden Pushes for Industry Action on AI Deepfakes Targeting Minors, Celebrities

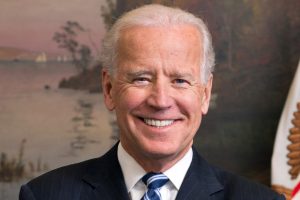

WASHINGTON — The Biden administration is reportedly urging the technology industry and financial institutions to address the burgeoning market of AI-generated sexual images.

Advances in generative AI have simplified the creation of realistic, sexually explicit deepfakes, enabling their spread across social media and chatrooms. Victims, including celebrities and minors, often find themselves powerless to stop the dissemination of these non-consensual images.

In the absence of federal legislation, the White House is seeking voluntary cooperation from companies. By adhering to specific measures, officials hope the private sector can help curb the creation, distribution, and monetization of non-consensual AI imagery, particularly explicit content involving children.

“As generative AI emerged, there was much speculation about where the first significant harms would occur. We now have an answer,” said Arati Prabhakar, Biden’s chief science adviser and director of the White House Office of Science and Technology Policy.

Prabhakar described a “phenomenal acceleration” of non-consensual imagery driven by AI tools, which largely targets women and girls and profoundly affects their lives.

“If you’re a teenage girl or a gay kid, these issues are affecting people right now,” she said. “Generative AI has accelerated this problem, and the quickest solution is for companies to take responsibility.”

A document shared with the Associated Press before its release calls for action from AI developers, payment processors, financial institutions, cloud computing providers, search engines, and gatekeepers like Apple and Google, which control app store content.

The administration emphasized that the private sector should “disrupt the monetization” of image-based sexual abuse, particularly by restricting payment access for sites advertising explicit images of minors.

Prabhakar noted that while many payment platforms and financial institutions claim to prohibit businesses promoting abusive imagery, enforcement is inconsistent.

“Sometimes it’s not enforced; sometimes they don’t have those terms of service,” she said. “That’s an area where more rigorous action is needed.”

Cloud service providers and app stores could also restrict web services and applications designed to create or alter sexual images without consent.

The administration also advocates for easier removal of nonconsensual sexual images, whether AI-generated or real, from online platforms.

One high-profile victim of pornographic deepfakes is Taylor Swift. In January, her fans fought back against abusive AI-generated images of her circulating on social media, prompting Microsoft to strengthen safeguards after some images were traced to its AI visual design tool.

Schools are increasingly dealing with AI-generated deepfake nudes of students, often created and shared by fellow teenagers.

Last summer, the Biden administration secured voluntary commitments from major tech companies like Amazon, Google, Meta, and Microsoft to implement safeguards on new AI systems before public release. An executive order followed in October, aimed at guiding AI development in ways that protect public safety. The order addressed broader AI concerns, including national security, as well as the detection of AI-generated child abuse imagery.

Despite these efforts, Biden acknowledged that AI safeguards need legislative support. A bipartisan group of senators is pushing for Congress to allocate at least $32 billion over three years for AI development and safety measures, though legislation on these safeguards remains pending.

Encouraging voluntary commitments from companies “doesn’t change the underlying need for Congress to take action,” said Jennifer Klein, director of the White House Gender Policy Council.

Existing laws criminalize making and possessing sexual images of children, even if they’re fake. This month, federal prosecutors charged a Wisconsin man with using the AI tool Stable Diffusion to create thousands of realistic images of minors engaged in sexual conduct. His attorney declined to comment after his arraignment.

However, there is minimal oversight of the tech tools and services that enable such image creation. Some operate on obscure commercial websites with little transparency.

The Stanford Internet Observatory found thousands of suspected child sexual abuse images in the AI database LAION, used to train AI image-makers like Stable Diffusion.

Stability AI, owner of the latest versions of Stable Diffusion, stated it “did not approve the release” of the earlier model reportedly used by the Wisconsin man. Open-source models, which are publicly available on the internet, are difficult to control.

Prabhakar emphasized that the problem extends beyond open-source technology.

“It’s a broader issue,” she said. “Unfortunately, many people use image generators for this purpose, and we’ve seen an explosion. It’s not neatly categorized into open source and proprietary systems.”