The Darker Side of Cutting-Edge Technology

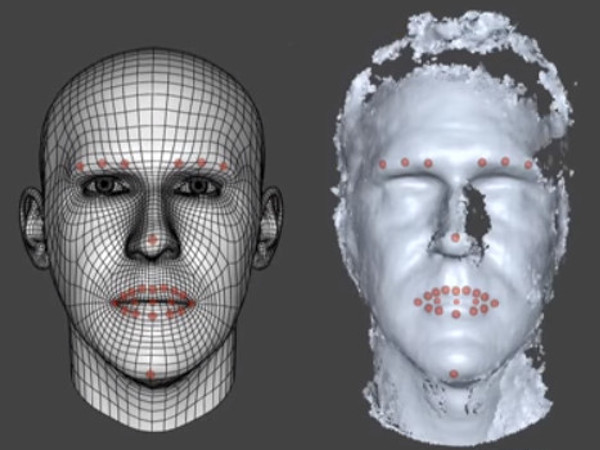

CYBERSPACE – Earlier this year, two enterprising adult entertainment companies added intriguing uses of facial-recognition technology to their websites. One app helps users find camgirls who match the user’s fantasy image. The other allows users to identify porn stars whose names they don’t know so they can search for other movies in which the performers appeared.

Both apps are tightly focused on a limited set of images and are used in ways that benefit both the searcher and the object of his desire.

In the wrong hands, though, the technology has a dark side … like matching porn stars’ on-screen images to the private citizens who inhabit their skin in real life.

Such a scenario looms over FindFace, an app developed by Russian company N-Tech.lab. The software was meant to help people make new friends or follow up with someone they met at a gathering but don't know how to contact. According to the company, the app has uses in dating, business relationships, criminology and crowd monitoring.

In November, FindFace won the international MegaFace Benchmark competition organized by the University of Washington, beating more than 100 rivals, including Google. With 73.3-percent accuracy, the software matched faces in a database containing more than 1 million images.

Egor Tsvetkov, a Russian artist, worries that technologies like FindFace can be dangerous. In March, he undertook a project in which he photographed random subway passengers and then used an early version of FindFace to match the images to profiles on Russia's Facebook analog, Vkontakte. He was able to match 70 percent of the anonymous subway riders with their profiles.

The experience left him rattled.

“In theory, this service could be used by a serial killer or a collector trying to hunt down a debtor,” he told RuNet Echo.

He told Apparat.cc, “People today are losing the freedom to do something in public and be certain that no one will know about it. It’s pretty scary.”

Global Voices Advocacy Director Ellery Roberts Biddle finds the potential real-life implications of the technology more than alarming. She specializes in issues of online harassment and digital privacy.

“Context matters,” she said. “A photo of a woman in a pretty dress may seem perfectly innocent in the context of a social network, but when displayed in a series of images presented through a stalker-like lens, the image starts to say something different.”

Tying real-world identities to on-screen personas is especially dangerous for Russian porn stars, who face not only stalking, but also potential legal action. Distributing pornography is a felony in Russia.

Adult entertainment performers, like everyone else, have personal lives. Many use Vkontakte under their real identities to keep up with family and friends, just as American porn stars use Facebook. Both groups usually are careful to separate their private and public lives.

But here's the catch: Like Facebook's, Vkontakte's terms of service require users to give up any expectation of privacy in images they display publicly on the network. That means FindFace and its users can process performers' (and anyone else's) private-life images whenever, wherever and however they wish. The developers have said they will have no choice but to cooperate with authorities investigating illegal activity.

“The only real way to protect yourself is to remember that everything you put online can become accessible to everyone,” legal analyst Damir Gainutdinov told Russian news site Meduza during an interview about FindFace and other potentially invasive technologies. “You can also turn to the law on personal data, which allows individuals to ask websites to report how exactly they manage their personal data. And you can also demand that they delete and cease processing this information.”